Remote sensing


Remote sensing is the acquisition of information about an object or phenomenon without making physical contact with the object, in contrast to in situ or on-site observation. The term is applied especially to acquiring information about Earth and other planets. Remote sensing is used in numerous fields, including geophysics, geography, land surveying and most Earth science disciplines (e.g. exploration geophysics, hydrology, ecology, meteorology, oceanography, glaciology, geology). It also has military, intelligence, commercial, economic, planning, and humanitarian applications, among others.

In current usage, the term remote sensing generally refers to the use of satellite- or aircraft-based sensor technologies to detect and classify objects on Earth.

Overview

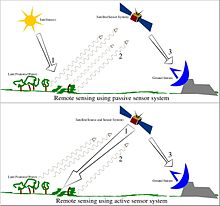

Remote sensing can be divided into two types of methods: passive remote sensing and active remote sensing. Passive sensors gather radiation that is emitted or reflected by the object or surrounding areas. Reflected sunlight is the most common source of radiation measured by passive sensors.

Remote sensing makes it possible to collect data on dangerous or inaccessible areas. Remote sensing applications include monitoring

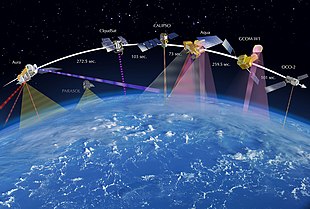

Orbital platforms collect and transmit data from different parts of the electromagnetic spectrum.

Types of data acquisition techniques

Multispectral collection and analysis are based on the fact that the examined areas or objects reflect or emit radiation that distinguishes them from surrounding areas. For a summary of major remote sensing satellite systems see the overview table.

Applications of remote sensing

- Conventional radar is mostly associated with air traffic control, early warning, and certain large-scale meteorological data.

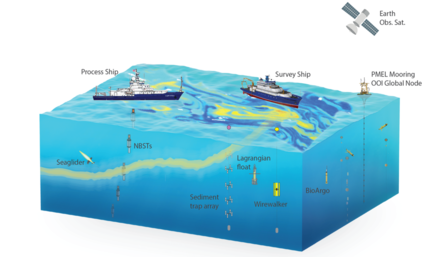

- Laser and radar altimeters on satellites have provided a wide range of data. By measuring the bulges of water caused by gravity, they map features on the seafloor to a resolution of a mile or so. By measuring the height and wavelength of ocean waves, the altimeters measure wind speeds and direction, and surface ocean currents and directions.

- Ultrasonic (acoustic) and radar tide gauges measure sea level, tides and wave direction at coastal and offshore sites.

- Light detection and ranging (LIDAR) is well known from military uses such as weapon ranging and laser-illuminated homing of projectiles. LIDAR is used to detect and measure the concentration of various chemicals in the atmosphere, while airborne LIDAR can be used to measure the heights of objects and features on the ground more accurately than radar. Vegetation remote sensing is a principal application of LIDAR.[10]

- emission spectra of various chemicals, providing data on chemical concentrations in the atmosphere.

or interfaced with oceanographic research vessels.[11]

- Radiometers are also used at night, because artificial light emissions are a key signature of human activity.[12] Applications include remote sensing of population, GDP, and damage to infrastructure from war or disasters.

- Radiometers and radar on board satellites can be used to monitor volcanic eruptions.[13][14]

- Aerial photographs have often been used by imagery and terrain analysts to make topographic maps for trafficability studies and for highway departments assessing potential routes, as well as for modelling terrestrial habitat features.[17][18][19]

- Simultaneous multi-spectral platforms such as Landsat have been in use since the 1970s. These thematic mappers take images in multiple wavelengths of electromagnetic radiation (multi-spectral) and are usually found on Earth observation satellites, such as those of the Landsat program or the IKONOS satellite. Maps of land cover and land use from thematic mapping can be used to prospect for minerals, detect or monitor land usage, detect invasive vegetation and deforestation, and examine the health of indigenous plants and crops (satellite crop monitoring), including entire farming regions or forests.[20] Prominent scientists using remote sensing for this purpose include Janet Franklin and Ruth DeFries. Landsat images are used by regulatory agencies such as KYDOW to indicate water quality parameters including Secchi depth, chlorophyll density, and total phosphorus content. Weather satellites are used in meteorology and climatology.

- Hyperspectral imaging produces an image in which each pixel has full spectral information, obtained by imaging narrow spectral bands over a contiguous spectral range. Hyperspectral imagers are used in various applications including mineralogy, biology, defence, and environmental measurements.

- In combating desertification, remote sensing allows researchers to follow up and monitor risk areas over the long term, determine desertification factors, support decision-makers in defining relevant environmental management measures, and assess their impacts.[21]

- Remotely sensed multi- and hyperspectral images can be used to assess biodiversity at different scales. Because different plant species have distinct spectral properties, it is possible to obtain information about properties that relate to biodiversity, such as habitat heterogeneity, spectral diversity and plant functional traits.[22][23][24]

- Remote sensing has been used to detect rare plants to aid in conservation efforts. Prediction, detection, and the ability to record biophysical conditions were possible from medium to very high resolutions.[25]

- Agricultural and environmental statistics, usually combining classified satellite images with ground truth data collected on a sample selected from an area sampling frame.[26]
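The hyperspectral and biodiversity applications above treat an image as a cube in which every pixel holds a spectrum. The sketch below, in plain Python, stores such a cube as nested lists indexed `cube[row][col][band]` and computes one simple spectral diversity measure: the mean distance of the pixel spectra from the scene's mean spectrum. Both the data layout and the choice of this particular measure are assumptions for illustration; published studies use a range of other diversity metrics, and the function names are hypothetical.

```python
import math

def pixel_spectrum(cube, row, col):
    """Return the spectrum of one pixel: one value per band."""
    return list(cube[row][col])

def spectral_diversity(cube):
    """Mean Euclidean distance of each pixel spectrum from the mean spectrum.

    An assumed, simple diversity measure: 0 for a spectrally uniform scene,
    larger when pixel spectra are more spread out.
    """
    spectra = [pixel for row in cube for pixel in row]
    n_bands = len(spectra[0])
    centroid = [sum(s[b] for s in spectra) / len(spectra) for b in range(n_bands)]
    return sum(math.dist(s, centroid) for s in spectra) / len(spectra)

# Hypothetical 2 x 2 scene with three bands per pixel.
cube = [
    [[0.1, 0.2, 0.3], [0.1, 0.2, 0.3]],
    [[0.5, 0.6, 0.7], [0.5, 0.6, 0.7]],
]
print(pixel_spectrum(cube, 1, 0))   # [0.5, 0.6, 0.7]
print(spectral_diversity(cube))
```

A real hyperspectral cube would hold hundreds of bands and millions of pixels, so array libraries are normally used instead of nested lists; the arithmetic is the same.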

Geodetic

- InSAR, LIDAR, etc.[27]

Acoustic and near-acoustic

- Sonar: passive sonar, listening for the sound made by another object (a vessel, a whale etc.); active sonar, emitting pulses of sounds and listening for echoes, used for detecting, ranging and measurements of underwater objects and terrain.

- Seismograms taken at different locations can locate and measure earthquakes (after they occur) by comparing the relative intensity and precise timings.

- Ultrasound: ultrasound sensors emit high-frequency pulses and listen for echoes; they are used to detect water waves and water level, as in tide gauges or towing tanks.
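Sonar, ultrasonic gauges, radar and LIDAR all infer distance from the round-trip time of an echo: the pulse travels to the target and back, so the one-way range is half the propagation speed multiplied by the measured time. A minimal sketch, assuming straight-line propagation at a constant speed; the 1,500 m/s seawater and 343 m/s air sound speeds are typical approximate values chosen for illustration, and the `water_level` helper for a downward-looking gauge is hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0   # m/s in vacuum (radar, LIDAR)
SOUND_IN_SEAWATER = 1500.0       # m/s, typical approximate value (sonar)
SOUND_IN_AIR = 343.0             # m/s at about 20 °C (ultrasonic gauges)

def echo_range(two_way_time_s, speed_m_s):
    """One-way distance to a target from the round-trip travel time of a pulse."""
    if two_way_time_s < 0:
        raise ValueError("travel time must be non-negative")
    return speed_m_s * two_way_time_s / 2.0

def water_level(sensor_height_m, two_way_time_s, speed_m_s=SOUND_IN_AIR):
    """Hypothetical downward-looking gauge: water level above the datum.

    The sensor sits sensor_height_m above the datum and ranges to the water surface.
    """
    return sensor_height_m - echo_range(two_way_time_s, speed_m_s)

print(echo_range(2.0, SOUND_IN_SEAWATER))   # 1500.0 m for a 2 s sonar echo
print(echo_range(1e-6, SPEED_OF_LIGHT))     # about 150 m for a 1 µs LIDAR return
```

In practice the propagation speed itself varies (with water temperature and salinity for sonar, with air temperature for ultrasound), which is one reason gauges are calibrated.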

To coordinate a series of large-scale observations, most sensing systems depend on the following: platform location and the orientation of the sensor. High-end instruments now often use positional information from

Data characteristics

The quality of remote sensing data is characterized by its spatial, spectral, radiometric and temporal resolutions.

- Spatial resolution

- The size of a pixel that is recorded in a raster image – typically pixels may correspond to square areas ranging in side length from 1 to 1,000 metres (3.3 to 3,280.8 ft).

- Spectral resolution

- The width of the wavelength bands recorded; this is usually related to the number of frequency bands recorded by the platform. Current Landsat collection is that of seven bands, including several in the infrared spectrum, ranging from a spectral resolution of 0.7 to 2.1 μm. The Hyperion sensor on Earth Observing-1 resolves 220 bands from 0.4 to 2.5 μm, with a spectral resolution of about 0.01 μm (10 nm) per band.

- Radiometric resolution

- The number of different intensities of radiation the sensor is able to distinguish. Typically, this ranges from 8 to 14 bits, corresponding to 256 levels of the gray scale and up to 16,384 intensities or "shades" of colour, in each band. It also depends on the instrument noise.

- Temporal resolution

- The frequency of flyovers by the satellite or plane. It is relevant only in time-series studies or those requiring an averaged or mosaic image, as in deforestation monitoring. This was first used by the intelligence community, where repeated coverage revealed changes in infrastructure, the deployment of units or the modification/introduction of equipment. Cloud cover over a given area or object makes it necessary to repeat the collection of that location.
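The resolutions above reduce to simple arithmetic. The sketch below (plain Python; the function names are hypothetical) computes the number of grey levels for a given bit depth, the average band width when a hyperspectral sensor spreads its bands evenly over a contiguous range, and the approximate number of square pixels needed to cover an area. Even spacing of bands is an assumption; real band widths vary somewhat across the spectrum.

```python
def grey_levels(bits):
    """Distinct intensity levels a sensor with this radiometric bit depth can record."""
    return 2 ** bits

def mean_band_width_um(start_um, end_um, n_bands):
    """Average band width (µm) if n_bands evenly tile a contiguous spectral range."""
    return (end_um - start_um) / n_bands

def pixels_to_cover(area_km2, pixel_side_m):
    """Approximate number of square pixels of the given side length covering an area."""
    return area_km2 * 1_000_000 / pixel_side_m ** 2

print(grey_levels(8), grey_levels(14))      # 256 16384
print(mean_band_width_um(0.4, 2.5, 220))    # roughly 0.0095 µm, i.e. about 10 nm
print(pixels_to_cover(1, 30))               # about 1,111 pixels of 30 m per km²
```

With 220 bands spread over 0.4 to 2.5 μm, the average width works out to roughly 0.01 μm per band.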

Data processing

In order to create sensor-based maps, most remote sensing systems need to extrapolate sensor data in relation to a reference point, including distances between known points on the ground. How this is done depends on the type of sensor used. For example, in conventional photographs, distances are accurate in the center of the image, with the distortion of measurements increasing with distance from the center. Another factor is the platen against which the film is pressed, which can cause severe errors when photographs are used to measure ground distances. The step in which this problem is resolved is called
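Relating image positions to points of known ground position can be illustrated with a least-squares fit. The sketch below assumes the simplest model, an affine transform from image (column, row) to ground (x, y), estimated from three or more non-collinear control points; the function names and example coordinates are hypothetical. An affine fit cannot remove the distance-dependent lens distortion or terrain relief described above, which in practice call for higher-order polynomials or a rigorous sensor model.

```python
def solve3(a, b):
    """Solve a 3x3 linear system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for i in range(3):
        pivot = max(range(i, 3), key=lambda r: abs(m[r][i]))
        m[i], m[pivot] = m[pivot], m[i]
        if abs(m[i][i]) < 1e-12:
            raise ValueError("control points are degenerate (e.g. collinear)")
        for r in range(i + 1, 3):
            f = m[r][i] / m[i][i]
            for c in range(i, 4):
                m[r][c] -= f * m[i][c]
    x = [0.0] * 3
    for i in range(2, -1, -1):
        x[i] = (m[i][3] - sum(m[i][c] * x[c] for c in range(i + 1, 3))) / m[i][i]
    return x

def fit_affine(image_pts, ground_pts):
    """Least-squares affine fit: x = a0 + a1*col + a2*row, y = b0 + b1*col + b2*row."""
    # Normal equations (A^T A) p = A^T t, where each row of A is [1, col, row].
    ata = [[0.0] * 3 for _ in range(3)]
    atx = [0.0] * 3
    aty = [0.0] * 3
    for (col, row), (x, y) in zip(image_pts, ground_pts):
        design = (1.0, col, row)
        for i in range(3):
            atx[i] += design[i] * x
            aty[i] += design[i] * y
            for j in range(3):
                ata[i][j] += design[i] * design[j]
    return solve3(ata, atx), solve3(ata, aty)

def apply_affine(coeffs, col, row):
    """Map an image position to ground coordinates with fitted coefficients."""
    (a0, a1, a2), (b0, b1, b2) = coeffs
    return a0 + a1 * col + a2 * row, b0 + b1 * col + b2 * row

# Hypothetical north-up image with 30 m pixels whose corner is at (500000, 4000000).
image_pts = [(0, 0), (100, 0), (0, 100), (100, 100)]
ground_pts = [(500_000 + 30 * c, 4_000_000 - 30 * r) for c, r in image_pts]
coeffs = fit_affine(image_pts, ground_pts)
print(apply_affine(coeffs, 50, 50))   # close to (501500.0, 3998500.0)
```

Using more control points than the minimum of three lets the least-squares fit average out small errors in individual point positions.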

In addition, images may need to be radiometrically and atmospherically corrected.

- Radiometric correction

- Radiometric correction avoids radiometric errors and distortions. The illumination of objects on the Earth's surface is uneven because of differences in relief, and this factor is taken into account in radiometric distortion correction.[28] Radiometric correction also gives a scale to the pixel values, e.g. a monochromatic scale of 0 to 255 is converted to actual radiance values.

- Topographic correction (also called terrain correction)

- In rugged mountains, the effective illumination of pixels varies considerably because of the terrain. In a remote sensing image, a pixel on a shady slope receives weak illumination and has a low radiance value, whereas a pixel on a sunny slope receives strong illumination and has a high radiance value. For the same object, the pixel radiance value on the shady slope will therefore differ from that on the sunny slope, and different objects may have similar radiance values. These ambiguities seriously reduce the accuracy of information extracted from remote sensing images in mountainous areas and have been the main obstacle to the further application of remote sensing images there. The purpose of topographic correction is to eliminate this effect, recovering the true reflectivity or radiance that objects would have on horizontal terrain. It is a prerequisite for quantitative remote sensing applications.

- Atmospheric correction

- Elimination of atmospheric haze by rescaling each frequency band so that its minimum value (usually realised in water bodies) corresponds to a pixel value of 0. The digitizing of data also makes it possible to manipulate the data by changing gray-scale values.
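The three corrections can be sketched per pixel in plain Python. The sketch assumes a linear gain/offset calibration (the coefficients in the example are hypothetical; real ones come from the sensor's metadata), uses the classic cosine method as one simple topographic correction (it is known to over-correct weakly illuminated slopes, and other methods exist), and implements the haze removal described above as dark-object subtraction, shifting each band so that its darkest pixel becomes 0. Function names are hypothetical.

```python
import math

def dn_to_radiance(dn, gain, offset):
    """Radiometric correction: map a raw digital number (e.g. 0-255) to radiance."""
    return gain * dn + offset

def cosine_topographic_correction(value, solar_zenith_deg, solar_azimuth_deg,
                                  slope_deg, aspect_deg):
    """Rescale a pixel on a slope to what a horizontal surface would record.

    cos_i is the cosine of the local solar incidence angle on the sloped pixel.
    """
    sz = math.radians(solar_zenith_deg)
    s = math.radians(slope_deg)
    cos_i = (math.cos(sz) * math.cos(s)
             + math.sin(sz) * math.sin(s)
             * math.cos(math.radians(solar_azimuth_deg - aspect_deg)))
    if cos_i <= 0:
        raise ValueError("pixel faces away from the sun; the cosine method is undefined")
    return value * math.cos(sz) / cos_i

def dark_object_subtraction(band):
    """Haze removal: shift a band so that its darkest pixel (often water) becomes 0."""
    darkest = min(band)
    return [v - darkest for v in band]

band = [dn_to_radiance(dn, gain=0.5, offset=-1.0) for dn in (12, 40, 200)]
print(band)                              # [5.0, 19.0, 99.0]
print(dark_object_subtraction(band))     # [0.0, 14.0, 94.0]
# A sun-facing 30° slope looks brighter than flat ground; the correction dims it.
print(cosine_topographic_correction(0.2, 30, 135, 30, 135))
```

On flat ground (slope 0) the cosine correction leaves the value unchanged, which is a quick sanity check on the formula.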

Interpretation is the critical process of making sense of the data. The first application was aerial photographic collection, which used the following process: spatial measurement using a light table on conventional single or stereographic coverage, supplemented by skills such as photogrammetry, the use of photomosaics, repeat coverage, and the use of objects' known dimensions to detect modifications. Image analysis is a more recently developed, automated, computer-aided application that is in increasing use.

Object-Based Image Analysis (OBIA) is a sub-discipline of GIScience devoted to partitioning remote sensing (RS) imagery into meaningful image-objects and assessing their characteristics at spatial, spectral and temporal scales.
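Partitioning imagery into image-objects can be illustrated with a toy example. The sketch below makes a strong simplifying assumption: it starts from an already classified raster and groups 4-connected pixels with the same class into objects, then computes two simple per-object characteristics (size and mean band value). Operational OBIA software segments the raw imagery with more elaborate algorithms, such as region growing at several scales; the function names here are hypothetical.

```python
from collections import deque

def segment_objects(labels):
    """Group 4-connected pixels sharing the same label into image-objects.

    Returns a grid of object ids (0, 1, 2, ...) with the same shape as labels.
    """
    rows, cols = len(labels), len(labels[0])
    obj = [[-1] * cols for _ in range(rows)]
    next_id = 0
    for r0 in range(rows):
        for c0 in range(cols):
            if obj[r0][c0] != -1:
                continue
            # Breadth-first flood fill from an unassigned seed pixel.
            obj[r0][c0] = next_id
            queue = deque([(r0, c0)])
            while queue:
                r, c = queue.popleft()
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols and obj[nr][nc] == -1
                            and labels[nr][nc] == labels[r0][c0]):
                        obj[nr][nc] = next_id
                        queue.append((nr, nc))
            next_id += 1
    return obj

def object_stats(obj, band):
    """Per-object pixel count and mean band value."""
    stats = {}
    for obj_row, band_row in zip(obj, band):
        for oid, value in zip(obj_row, band_row):
            n, total = stats.get(oid, (0, 0.0))
            stats[oid] = (n + 1, total + value)
    return {oid: {"pixels": n, "mean": total / n} for oid, (n, total) in stats.items()}

labels = [[1, 1, 2],
          [1, 2, 2],
          [3, 3, 2]]
band = [[10, 12, 50],
        [14, 52, 54],
        [80, 82, 56]]
objects = segment_objects(labels)
print(objects)                      # [[0, 0, 1], [0, 1, 1], [2, 2, 1]]
print(object_stats(objects, band))
```

Object-level features such as size, mean value or shape can then be used for classification instead of individual pixel values, which is the core idea of OBIA.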

Old data from remote sensing is often valuable because it may provide the only long-term data for a large extent of geography. At the same time, the data is often complex to interpret, and bulky to store. Modern systems tend to store the data digitally, often with

Generally speaking, remote sensing works on the principle of the inverse problem: while the object or phenomenon of interest (the state) may not be directly measurable, some other variable (the observation) can be detected and measured and then related to the object of interest through a calculation. A common analogy is determining the type of animal from its footprints. For example, while it is impossible to directly measure temperatures in the upper atmosphere, it is possible to measure the spectral emissions from a known chemical species (such as carbon dioxide) in that region. The frequency of the emissions may then be related via thermodynamics to the temperature in that region.
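The temperature example can be sketched as a forward model plus its inversion. Assuming the emitting layer behaves as a blackbody (emissivity 1) observed at a single wavelength with nothing in between, Planck's law gives the radiance expected at a given temperature, and solving it for temperature turns a measured radiance into a brightness temperature. Real atmospheric sounding combines many channels and a model of where in the atmosphere each one senses; the 15 μm wavelength below lies in a carbon dioxide absorption band and is chosen only for illustration.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K = 1.380649e-23     # Boltzmann constant, J/K

def planck_radiance(wavelength_m, temperature_k):
    """Forward model: blackbody spectral radiance in W m^-2 sr^-1 m^-1."""
    return (2 * H * C ** 2 / wavelength_m ** 5) / math.expm1(
        H * C / (wavelength_m * K * temperature_k))

def brightness_temperature(wavelength_m, radiance):
    """Inverse step: Planck's law solved for the temperature emitting this radiance."""
    return (H * C / (wavelength_m * K)) / math.log1p(
        2 * H * C ** 2 / (wavelength_m ** 5 * radiance))

wavelength = 15e-6                                   # 15 µm (illustrative)
observed = planck_radiance(wavelength, 220.0)        # stands in for a measurement
print(brightness_temperature(wavelength, observed))  # recovers about 220 K
```

The forward model maps state to observation; the retrieval runs it backwards, which is the inverse problem in its simplest form.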

Data processing levels

To facilitate the discussion of data processing in practice, several processing "levels" were first defined in 1986 by NASA as part of its Earth Observing System[29] and have been steadily adopted since then, both internally at NASA (e.g.,[30]) and elsewhere (e.g.,[31]); these definitions are:

| Level | Description |
|---|---|
| 0 | Reconstructed, unprocessed instrument and payload data at full resolution, with any and all communications artifacts (e.g., synchronization frames, communications headers, duplicate data) removed. |
| 1a | Reconstructed, unprocessed instrument data at full resolution, time-referenced, and annotated with ancillary information, including radiometric and geometric calibration coefficients and georeferencing parameters (e.g., platform ephemeris) computed and appended but not applied to the Level 0 data (or if applied, in a manner that Level 0 is fully recoverable from Level 1a data). |
| 1b | Level 1a data that have been processed to sensor units (e.g., radar backscatter cross section, brightness temperature, etc.); not all instruments have Level 1b data; Level 0 data is not recoverable from Level 1b data. |
| 2 | Derived geophysical variables (e.g., ocean wave height, soil moisture, ice concentration) at the same resolution and location as Level 1 source data. |
| 3 | Variables mapped on uniform spacetime grid scales, usually with some completeness and consistency (e.g., missing points interpolated, complete regions mosaicked together from multiple orbits, etc.). |
| 4 | Model output or results from analyses of lower level data (i.e., variables that were not measured by the instruments but instead are derived from these measurements). |

A Level 1 data record is the most fundamental (i.e., highest reversible level) data record that has significant scientific utility, and is the foundation upon which all subsequent data sets are produced. Level 2 is the first level that is directly usable for most scientific applications; its value is much greater than that of the lower levels. Level 2 data sets tend to be less voluminous than Level 1 data because they have been reduced temporally, spatially, or spectrally. Level 3 data sets are generally smaller than lower-level data sets and thus can be handled without incurring a great deal of data handling overhead. These data tend to be more useful for many applications. The regular spatial and temporal organization of Level 3 data sets makes it feasible to readily combine data from different sources.
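Moving from Level 2 to Level 3 amounts to resampling irregularly located retrievals onto a regular grid. A minimal sketch, assuming a simple latitude/longitude grid, plain averaging of all observations that fall in a cell, and no time dimension; the function name and sample values are hypothetical, and operational products add quality weighting, interpolation of gaps and explicit time bins.

```python
from collections import defaultdict

def grid_level2(points, cell_deg):
    """Bin irregular Level 2 observations onto a uniform lat/lon grid.

    points: iterable of (lat, lon, value) tuples, e.g. swath retrievals.
    Returns {(row, col): mean value} for every cell that received data;
    empty cells are simply absent here.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for lat, lon, value in points:
        # Integer cell indices counted from the south-west corner (-90, -180).
        key = (int((lat + 90.0) // cell_deg), int((lon + 180.0) // cell_deg))
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}

swath = [(0.2, 0.3, 1.0), (0.7, 0.9, 3.0), (1.5, 0.5, 5.0)]   # (lat, lon, value)
print(grid_level2(swath, cell_deg=1.0))   # {(90, 180): 2.0, (91, 180): 5.0}
```

Because every Level 3 product built this way shares the same grid, cells from different sensors or orbits can be combined by key, which is the practical advantage noted above.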

While these processing levels are particularly suitable for typical satellite data processing pipelines, other data level vocabularies have been defined and may be appropriate for more heterogeneous workflows.

Applications

Satellite images provide very useful information for producing statistics on topics closely related to the territory, such as agriculture, forestry or land cover in general. The first large project to apply Landsat 1 images to statistics was LACIE (Large Area Crop Inventory Experiment), run by NASA, NOAA and the USDA in 1974–77.[32][33] Many other application projects on crop area estimation have followed, including the Italian AGRIT project and the MARS project of the Joint Research Centre (JRC) of the European Commission.[34] Forest area and deforestation estimation have also been a frequent target of remote sensing projects,[35][36] as have land cover and land use.[37]

Ground truth or reference data to train and validate image classification require a field survey if we are targeting annual crops or individual forest species, but may be substituted by photo-interpretation if we look at wider classes that can be reliably identified on aerial photos or satellite images. It is worth highlighting that probabilistic sampling is not critical for the selection of training pixels for image classification, but it is necessary for accuracy assessment of the classified images and for area estimation.[38][39][40] Additional care is recommended to ensure that training and validation data sets are not spatially correlated.[41]
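One common way to keep training and validation samples apart in space is to split them by spatial blocks rather than pixel by pixel. The sketch below assigns each sample to a square block of `block_size` pixels and sends whole blocks to validation; the block size, sample format and function name are assumptions for illustration. Blocks reduce, but do not eliminate, spatial correlation, since samples on either side of a block boundary can still be close together.

```python
def block_split(samples, block_size, validation_blocks):
    """Split (row, col, label) samples into training and validation sets by block.

    Every sample in a block listed in validation_blocks goes to validation,
    so no validation pixel shares a block with a training pixel.
    """
    train, validation = [], []
    for row, col, label in samples:
        block = (row // block_size, col // block_size)
        (validation if block in validation_blocks else train).append((row, col, label))
    return train, validation

samples = [(0, 0, "wheat"), (1, 2, "wheat"), (5, 5, "other"), (6, 4, "other")]
train, validation = block_split(samples, block_size=4, validation_blocks={(1, 1)})
print(len(train), len(validation))   # 2 2
```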

Suppose now that we have classified images or a land cover map produced by visual photo-interpretation, with a legend of mapped classes that suits our purpose, again taking the example of wheat. The straightforward approach is to count the number of pixels classified as wheat and multiply by the area of each pixel. Many authors have noted that this estimator is generally biased because commission and omission errors in a confusion matrix do not compensate each other.[42][43][44]
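The pixel-counting estimator and the reason for its bias can be written down directly. For a class, the mapped total equals the true total minus omission errors plus commission errors, so the count is unbiased only when those two errors happen to cancel. A minimal sketch with a hypothetical two-class confusion matrix (rows are reference classes, columns are mapped classes), assumed for illustration to tally every pixel in the area, and 30 m pixels; the function names are hypothetical.

```python
def pixel_count_area(n_pixels, pixel_side_m):
    """Naive estimator: pixels classified as the class times one pixel's area (ha)."""
    return n_pixels * pixel_side_m ** 2 / 10_000

def mapped_vs_true(confusion, cls):
    """Compare mapped and reference pixel totals for one class.

    confusion[i][j] = pixels whose reference class is i and mapped class is j.
    mapped = true - omission + commission.
    """
    true_total = sum(confusion[cls])
    mapped_total = sum(row[cls] for row in confusion)
    return {"true": true_total, "mapped": mapped_total,
            "omission": true_total - confusion[cls][cls],
            "commission": mapped_total - confusion[cls][cls]}

WHEAT, OTHER = 0, 1
confusion = [[80, 20],    # reference wheat: 80 mapped as wheat, 20 omitted
             [5, 895]]    # reference other: 5 wrongly mapped as wheat
counts = mapped_vs_true(confusion, WHEAT)
print(counts)   # {'true': 100, 'mapped': 85, 'omission': 20, 'commission': 5}
print(pixel_count_area(counts["mapped"], 30), pixel_count_area(counts["true"], 30))
```

Here 20 omitted pixels outweigh 5 committed ones, so counting mapped pixels underestimates the wheat area by 15 pixels.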

The main strength of classified satellite images, or other indicators computed from satellite images, is that they provide cheap information on the whole target area or most of it. This information usually correlates well with the target variable (ground truth), which is usually expensive to observe in an unbiased and accurate way. The target variable can therefore be observed on a probabilistic sample selected from an area sampling frame. Traditional survey methodology provides different methods to combine accurate information on a sample with less accurate, but exhaustive, data for a covariable or proxy that is cheaper to collect. For agricultural statistics, field surveys are usually required, while photo-interpretation may be better for land cover classes that can be reliably identified on aerial photographs or high-resolution satellite images. Additional uncertainty can arise from imperfect reference data (ground truth or similar).[45][46]

Some options are ratio estimators, regression estimators,[47] calibration estimators[48] and small area estimators.[37]
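The ratio and regression estimators can be sketched for a simple random sample of area-frame segments, where `y` is the ground-surveyed crop area in each sampled segment and `x` is the area classified as that crop on the image, which is known for every segment in the region. This is a minimal sketch under those assumptions: it ignores stratification, sampling weights and variance estimation, and the numbers and function names are hypothetical.

```python
def ratio_estimate(y_sample, x_sample, x_population_total):
    """Ratio estimator of a population total: (sum y / sum x) * X."""
    return sum(y_sample) / sum(x_sample) * x_population_total

def regression_estimate(y_sample, x_sample, x_population_mean, n_population_units):
    """Regression estimator of a population total, using the least-squares slope b."""
    n = len(y_sample)
    y_bar = sum(y_sample) / n
    x_bar = sum(x_sample) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(x_sample, y_sample))
    sxx = sum((x - x_bar) ** 2 for x in x_sample)
    b = sxy / sxx
    # Adjust the sample mean by how far the sample's x differs from the known population mean.
    return n_population_units * (y_bar + b * (x_population_mean - x_bar))

x_sample = [10.0, 20.0, 30.0, 40.0]   # classified wheat (ha) in sampled segments
y_sample = [12.0, 21.0, 33.0, 42.0]   # ground-surveyed wheat (ha), same segments
N, x_total = 100, 2200.0              # all segments in the region; classified wheat total
print(ratio_estimate(y_sample, x_sample, x_total))               # about 2376 ha
print(regression_estimate(y_sample, x_sample, x_total / N, N))   # about 2394 ha
```

The ground sample calibrates the relationship between `y` and `x`, so systematic commission or omission in the classification is corrected rather than carried into the total (the ratio estimator still has a small-sample bias of its own).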

If we target other variables, such as crop yield or leaf area, we may need different indicators computed from the images, such as the NDVI, a good proxy for chlorophyll activity.[49]
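The NDVI is computed per pixel from red and near-infrared values as (NIR − Red) / (NIR + Red); healthy vegetation absorbs red light and reflects strongly in the near infrared, so green canopies give high positive values. A minimal sketch, assuming the inputs are reflectances already corrected as described under Data processing; the function names are hypothetical.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index for one pixel."""
    total = nir + red
    if total == 0:
        return float("nan")   # undefined, e.g. for zero-valued no-data pixels
    return (nir - red) / total

def ndvi_band(nir_band, red_band):
    """Apply ndvi pixel by pixel to two co-registered bands."""
    return [ndvi(n, r) for n, r in zip(nir_band, red_band)]

print(ndvi_band([0.50, 0.30, 0.05], [0.08, 0.20, 0.07]))
```

Values range from −1 to 1; bare soil tends toward small positive values and open water toward negative values, while dense vegetation scores highest.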

History

The modern discipline of remote sensing arose with the development of flight. The balloonist G. Tournachon (alias

Systematic aerial photography was developed for military surveillance and reconnaissance purposes beginning in World War I.[51] After WWI, remote sensing technology was quickly adapted to civilian applications.[52] This is demonstrated by the first line of a 1941 textbook titled "Aerophotography and Aerosurveying," which stated the following:

"There is no longer any need to preach for aerial photography-not in the United States- for so widespread has become its use and so great its value that even the farmer who plants his fields in a remote corner of the country knows its value."

— James Bagley, [52]

The development of remote sensing technology reached a climax during the

The development of artificial satellites in the latter half of the 20th century allowed remote sensing to progress to a global scale by the end of the Cold War.

Recent developments include, beginning in the 1960s and 1970s, the development of

Training and education

Remote Sensing has a growing relevance in the modern information society. It represents a key technology as part of the aerospace industry and bears increasing economic relevance – new sensors e.g.

Many teachers are very interested in the subject of remote sensing and motivated to integrate it into their teaching, provided that the curriculum allows for it. In many cases, this motivation is defeated by confusing information.

Software

Remote sensing data are processed and analyzed with computer software, known as a

Remote sensing with gamma rays

There are applications of gamma rays to mineral exploration through remote sensing. In 1972 more than two million dollars were spent on remote sensing applications with gamma rays to mineral exploration. Gamma rays are used to search for deposits of uranium. By observing radioactivity from potassium, porphyry copper deposits can be located. A high ratio of uranium to thorium has been found to be related to the presence of hydrothermal copper deposits. Radiation patterns have also been known to occur above oil and gas fields, but some of these patterns were thought to be due to surface soils instead of oil and gas.[71]

Satellites

An

The first occurrence of satellite remote sensing can be dated to the launch of the first artificial satellite, Sputnik 1, by the Soviet Union on October 4, 1957.[72] Sputnik 1 sent back radio signals, which scientists used to study the ionosphere.[73] The United States Army Ballistic Missile Agency launched the first American satellite, Explorer 1, for NASA's Jet Propulsion Laboratory on January 31, 1958. The information sent back from its radiation detector led to the discovery of the Earth's Van Allen radiation belts.[74] The TIROS-1 spacecraft, launched on April 1, 1960, as part of NASA's Television Infrared Observation Satellite (TIROS) program, sent back the first television footage of weather patterns to be taken from space.[72]

In 2008, more than 150 Earth observation satellites were in orbit, recording data with both passive and active sensors and acquiring more than 10 terabits of data daily.[72] By 2021, that total had grown to over 950, with the largest number of satellites operated by the US-based company Planet Labs.[75]

Most

To get global coverage with a low orbit, a polar orbit is used. A low orbit will have an orbital period of roughly 100 minutes and the Earth will rotate around its polar axis about 25° between successive orbits. The ground track moves towards the west 25° each orbit, allowing a different section of the globe to be scanned with each orbit. Most are in Sun-synchronous orbits.
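The figures above follow from Kepler's third law for a circular orbit, T = 2π√(a³/GM), where a is the distance from the Earth's centre. A minimal sketch, assuming a spherical Earth and circular orbits and ignoring effects such as orbital precession; the 700 km altitude is an illustrative choice. It gives a period of roughly 99 minutes, an Earth rotation of about 25° between passes and, inverted, the altitude at which the period matches one rotation of the Earth (a sidereal day of about 23 h 56 min), roughly 35,800 km, which is the geostationary altitude discussed next.

```python
import math

GM_EARTH = 3.986004418e14      # Earth's gravitational parameter, m^3/s^2
R_EARTH = 6_378_137.0          # equatorial radius, m
SIDEREAL_DAY_S = 86_164.0905   # one rotation of the Earth relative to the stars, s

def orbital_period_s(altitude_m):
    """Kepler's third law for a circular orbit at the given altitude."""
    a = R_EARTH + altitude_m
    return 2 * math.pi * math.sqrt(a ** 3 / GM_EARTH)

def rotation_between_orbits_deg(period_s):
    """Degrees the Earth turns beneath the orbit during one revolution."""
    return 360.0 * period_s / SIDEREAL_DAY_S

def altitude_for_period_m(period_s):
    """Kepler's third law solved for altitude: a = (GM * T^2 / (4 pi^2))^(1/3)."""
    a = (GM_EARTH * period_s ** 2 / (4 * math.pi ** 2)) ** (1 / 3)
    return a - R_EARTH

t_low = orbital_period_s(700_000)                      # illustrative 700 km low orbit
print(t_low / 60)                                      # roughly 99 minutes
print(rotation_between_orbits_deg(t_low))              # roughly 25 degrees
print(altitude_for_period_m(SIDEREAL_DAY_S) / 1000)    # roughly 35,800 km
```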

A geostationary orbit, at 36,000 km (22,000 mi), allows a satellite to hover over a constant spot on the Earth, since the orbital period at this altitude matches the Earth's rotation (about 24 hours). This allows uninterrupted coverage of more than 1/3 of the Earth per satellite, so three satellites spaced 120° apart can cover the whole Earth. This type of orbit is mainly used for meteorological satellites.

See also

- Airborne Real-time Cueing Hyperspectral Enhanced Reconnaissance

- American Society for Photogrammetry and Remote Sensing

- Archaeological imagery

- Asian Association on Remote Sensing

- CLidar

- Coastal management

- Crateology

- First images of Earth from space

- Full spectral imaging

- Geographic information system (GIS)

- GIS and hydrology

- Geoinformatics

- Geophysical survey

- Global Positioning System (GPS)

- Ground truth § Remote sensing

- IEEE Geoscience and Remote Sensing Society

- Image mosaic

- Imagery analysis

- Imaging science

- International Society for Photogrammetry and Remote Sensing

- Land change science

- Liquid crystal tunable filter

- List of Earth observation satellites

- Mobile mapping

- Multispectral pattern recognition

- National Center for Remote Sensing, Air and Space Law

- National LIDAR Dataset

- Normalized difference water index

- Orthophoto

- Pictometry

- Radiometry

- Remote monitoring and control

- Technical geography

- TopoFlight

- Vector Map

References

- ISBN 978-0-12-369407-2. Archived from the original on 1 May 2016. Retrieved 15 November 2015.

- ISBN 978-0-19-517817-3. Archived from the original on 24 April 2016. Retrieved 15 November 2015.

- (PDF) from the original on 19 July 2018. Retrieved 27 October 2021.

- ISBN 978-0-470-51032-2. Retrieved 2 April 2023.

- ^ "Saving the monkeys". SPIE Professional. Archived from the original on 4 February 2016. Retrieved 1 January 2016.

- S2CID 120031016.

- .

- S2CID 44706251.

- ^ "Science@nasa - Technology: Remote Sensing". Archived from the original on 29 September 2006. Retrieved 18 February 2009.

- .

- ^ Just Sit Right Back and You'll Hear a Tale, a Tale of a Plankton Trip Archived 10 August 2021 at the Wayback Machine NASA Earth Expeditions, 15 August 2018.

- S2CID 214254543.

- .

- .

- ^ Goldberg, A.; Stann, B.; Gupta, N. (July 2003). "Multispectral, Hyperspectral, and Three-Dimensional Imaging Research at the U.S. Army Research Laboratory" (PDF). Proceedings of the International Conference on International Fusion [6th]. 1: 499–506.

- ISSN 0924-2716.

- S2CID 140189982.

- .

- (PDF) from the original on 13 July 2021. Retrieved 27 October 2021.

- .

- ^ "Begni G. Escadafal R. Fontannaz D. and Hong-Nga Nguyen A.-T. (2005). Remote sensing: a tool to monitor and assess desertification. Les dossiers thématiques du CSFD. Issue 2. 44 pp". Archived from the original on 26 May 2019. Retrieved 27 October 2021.

- S2CID 197567301.

- S2CID 59446258.

- S2CID 256718584.

- S2CID 233886263.

- .

- ^ "Geodetic Imaging". Archived from the original on 2 October 2016. Retrieved 29 September 2016.

- .

- ^ NASA (1986), Report of the EOS data panel, Earth Observing System, Data and Information System, Data Panel Report, Vol. IIa., NASA Technical Memorandum 87777, June 1986, 62 pp. Available at http://hdl.handle.net/2060/19860021622 Archived 27 October 2021 at the Wayback Machine

- ^ C. L. Parkinson, A. Ward, M. D. King (Eds.) Earth Science Reference Handbook – A Guide to NASA's Earth Science Program and Earth Observing Satellite Missions, National Aeronautics and Space Administration Washington, D. C. Available at http://eospso.gsfc.nasa.gov/ftp_docs/2006ReferenceHandbook.pdf Archived 15 April 2010 at the Wayback Machine

- ^ GRAS-SAF (2009), Product User Manual, GRAS Satellite Application Facility, Version 1.2.1, 31 March 2009. Available at http://www.grassaf.org/general-documents/products/grassaf_pum_v121.pdf Archived 26 July 2011 at the Wayback Machine

- ^ Houston, A.H. "Use of satellite data in agricultural surveys". Communications in Statistics. Theory and Methods (23): 2857–2880.

- ^ Allen, J.D. "A Look at the Remote Sensing Applications Program of the National Agricultural Statistics Service". Journal of Official Statistics. 6 (4): 393–409.

- ^ Taylor, J (1997). Regional Crop Inventories in Europe Assisted by Remote Sensing: 1988-1993. Synthesis Report. Luxembourg: Office for Publications of the EC.

- JSTOR 2845527.

- PMID 12169731.

- ^ .

- ISBN 978-0-429-05272-9.

- .

- .

- .

- ^ Czaplewski, R.L. "Misclassification bias in areal estimates". Photogrammetric Engineering and Remote Sensing (39): 189–192.

- ^ Bauer, M.E. (1978). "Area estimation of crops by digital analysis of Landsat data". Photogrammetric Engineering and Remote Sensing (44): 1033–1043.

- .

- .

- .

- .

- .

- .

- ^ Maksel, Rebecca. "Flight of the Giant". Air & Space Magazine. Archived from the original on 18 August 2021. Retrieved 19 February 2019.

- ISSN 0307-1235. Archived from the original on 18 April 2014. Retrieved 19 February 2019.

- ^ a b Bagley, James (1941). Aerophotography and Aerosurveying (1st ed.). York, PA: The Maple Press Company.

- ^ "Air Force Magazine". www.airforcemag.com. Archived from the original on 19 February 2019. Retrieved 19 February 2019.

- ^ "Military Imaging and Surveillance Technology (MIST)". www.darpa.mil. Archived from the original on 18 August 2021. Retrieved 19 February 2019.

- .

- ^ "In Depth | Magellan". Solar System Exploration: NASA Science. Archived from the original on 19 October 2021. Retrieved 19 February 2019.

- ^ Garner, Rob (15 April 2015). "SOHO - Solar and Heliospheric Observatory". NASA. Archived from the original on 18 September 2021. Retrieved 19 February 2019.

- ISBN 978-1-60918-176-5.

- ISBN 978-0-9866376-0-5.

- ^ Fussell, Jay; Rundquist, Donald; Harrington, John A. (September 1986). "On defining remote sensing" (PDF). Photogrammetric Engineering and Remote Sensing. 52 (9): 1507–1511. Archived from the original (PDF) on 4 October 2021.

- JSTOR 2569553.

- ^ Colen, Jerry (8 April 2015). "Ames Research Center Overview". NASA. Archived from the original on 28 September 2021. Retrieved 19 February 2019.

- ^ Ditter, R., Haspel, M., Jahn, M., Kollar, I., Siegmund, A., Viehrig, K., Volz, D., Siegmund, A. (2012) Geospatial technologies in school – theoretical concept and practical implementation in K-12 schools. In: International Journal of Data Mining, Modelling and Management (IJDMMM): FutureGIS: Riding the Wave of a Growing Geospatial Technology Literate Society; Vol. X

- ^ Stork, E.J., Sakamoto, S.O., and Cowan, R.M. (1999) "The integration of science explorations through the use of earth images in middle school curriculum", Proc. IEEE Trans. Geosci. Remote Sensing 37, 1801–1817

- S2CID 62414447.

- ^ Digital Earth

- ^ "FIS – Remote Sensing in School Lessons". Archived from the original on 26 October 2012. Retrieved 25 October 2012.

- ^ "geospektiv". Archived from the original on 2 May 2018. Retrieved 1 June 2018.

- ^ "YCHANGE". Archived from the original on 17 August 2018. Retrieved 1 June 2018.

- ^ "Landmap – Spatial Discovery". Archived from the original on 29 November 2014. Retrieved 27 October 2021.

- ISBN 978-3-642-66238-6.

- ^ PMID 19498953.

- .

- ^ "James A. Van Allen". nmspacemuseum.org. New Mexico Museum of Space History. Retrieved 14 May 2018.

- ^ "How many Earth observation satellites are orbiting the planet in 2021?". 18 August 2021.

- ^ "DubaiSat-2, Earth Observation Satellite of UAE". Mohammed Bin Rashid Space Centre. Archived from the original on 17 January 2019. Retrieved 4 July 2016.

- ^ "DubaiSat-1, Earth Observation Satellite of UAE". Mohammed Bin Rashid Space Centre. Archived from the original on 4 March 2016. Retrieved 4 July 2016.

Further reading

- Campbell, J. B. (2002). Introduction to remote sensing (3rd ed.). The Guilford Press. ISBN 978-1-57230-640-0.

- Datla, R.U.; Rice, J.P.; Lykke, K.R.; Johnson, B.C.; Butler, J.J.; Xiong, X. (March–April 2011). "Best practice guidelines for pre-launch characterization and calibration of instruments for passive optical remote sensing". Journal of Research of the National Institute of Standards and Technology. 116 (2): 612–646. PMID 26989588.

- Dupuis, C.; Lejeune, P.; Michez, A.; Fayolle, A. How Can Remote Sensing Help Monitor Tropical Moist Forest Degradation?—A Systematic Review. Remote Sens. 2020, 12, 1087. https://www.mdpi.com/2072-4292/12/7/1087

- Jensen, J. R. (2007). Remote sensing of the environment: an Earth resource perspective (2nd ed.). Prentice Hall. ISBN 978-0-13-188950-7.

- Jensen, J. R. (2005). Digital Image Processing: a Remote Sensing Perspective (3rd ed.). Prentice Hall.

- Kuenzer, C.; Zhang, J.; Tetzlaff, A.; Dech, S. (2013). "Thermal Infrared Remote Sensing of Surface and Underground Coal Fires". In Kuenzer, C.; Dech, S. (eds.). Thermal Infrared Remote Sensing – Sensors, Methods, Applications. Remote Sensing and Digital Image Processing Series, Volume 17, 572 pp., pp. 429–451. ISBN 978-94-007-6638-9.

- Kuenzer, C. and S. Dech 2013: Thermal Infrared Remote Sensing – Sensors, Methods, Applications. Remote Sensing and Digital Image Processing Series, Volume 17, 572 pp., ISBN 978-94-007-6638-9

- Lasaponara, R. and ISBN 978-90-481-8801-7.

- Lentile, Leigh B.; Holden, Zachary A.; Smith, Alistair M. S.; Falkowski, Michael J.; Hudak, Andrew T.; Morgan, Penelope; Lewis, Sarah A.; Gessler, Paul E.; Benson, Nate C. (2006). "Remote sensing techniques to assess active fire characteristics and post-fire effects". International Journal of Wildland Fire. 3 (15): 319–345. S2CID 724358. Archived from the original on 12 August 2014. Retrieved 4 February 2010.

- Lillesand, T. M.; R. W. Kiefer; J. W. Chipman (2003). Remote sensing and image interpretation (5th ed.). Wiley. ISBN 978-0-471-15227-9.

- Richards, J. A.; X. Jia (2006). Remote sensing digital image analysis: an introduction (4th ed.). Springer. ISBN 978-3-540-25128-6.

External links

Media related to Remote sensing at Wikimedia Commons

- Remote Sensing at Curlie